If you want to analyze unstructured or semi-structured data, a traditional data warehouse is a poor fit. More companies are moving to the data lakehouse architecture, which helps address this limitation. The open data lakehouse allows you to run warehouse workloads on all kinds of data in an open and flexible architecture.

When does a data warehouse typically employ a multi-tiered design approach?

MarkLogic is a fully managed, fully automated cloud service to integrate data from silos. When you first hear the term "data warehouse," you might think of related data terms like "data lake," "database," or "data mart." However, those are different things because they have a more limited scope. The data model includes primary and foreign keys, as well as the data types for each column. In Inmon's method, the requirements are gathered and the data warehouse is developed for the organisation as a whole, rather than for individual business areas as in the Kimball method.

Architecture Types

“The more complex things get, the simpler you need to make them.” Planning doesn’t have to take months, and it helps for people to understand that systems talk to one another, so they can see what questions to answer. “Some of these very basic things that are so critical, like whether a sales rep has more than one region. You can just get together and quickly figure them out before you go running,” she said.

Once data reaches the validation stage of the data pipeline, it can be validated against set constraints if they exist. For example, if you set up a field constraint that expects an integer but the data being passed is a string, this may suggest that the dataset contains errors and should undergo further validation. This stage acts as another filter to ensure that only the right data progresses through the data pipeline.
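The integer-versus-string check described above can be sketched in a few lines of Python. This is only an illustration under assumptions: the `FieldConstraint` class, the `validate_record` and `split_valid` functions, and the `quantity` field are hypothetical names, not part of any particular pipeline tool.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Any


@dataclass(frozen=True)
class FieldConstraint:
    """Hypothetical constraint: a field name and the Python type it must have."""
    name: str
    expected_type: type


def validate_record(record: dict[str, Any], constraints: list[FieldConstraint]) -> list[str]:
    """Return a list of human-readable constraint violations for one record."""
    errors = []
    for c in constraints:
        if c.name not in record:
            errors.append(f"{c.name}: missing")
            continue
        value = record[c.name]
        # bool is a subclass of int in Python, so reject it explicitly for int fields.
        is_bool_for_int = c.expected_type is int and isinstance(value, bool)
        if is_bool_for_int or not isinstance(value, c.expected_type):
            errors.append(
                f"{c.name}: expected {c.expected_type.__name__}, got {type(value).__name__}"
            )
    return errors


def split_valid(records: list[dict[str, Any]], constraints: list[FieldConstraint]):
    """Separate records that pass every constraint from those needing further review."""
    valid, rejected = [], []
    for record in records:
        errors = validate_record(record, constraints)
        if errors:
            rejected.append((record, errors))
        else:
            valid.append(record)
    return valid, rejected
```

Records that land in `rejected` are not discarded here; they are kept with their error messages so they can undergo the further validation the text mentions.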

Approaches to Building a Data Warehouse

Every department needs to understand the purpose of the data warehouse, how it benefits them, and what kinds of results they can expect from your warehousing solution.

Once the data warehouse is deployed, it is essential to establish a maintenance plan to ensure it continues functioning smoothly and providing value to the organization. Maintenance tasks may include monitoring performance, updating software and hardware, query optimization, and ensuring data quality. It's important to regularly examine and revise the data warehouse design to ensure it meets the organization's changing needs.

A data warehouse system enables an organization to run powerful analytics on large amounts of data (up to petabytes) in ways that a standard database cannot. You design and build your data warehouse based on your reporting requirements. After you have identified the data you need, you design the data flows that bring it into your data warehouse. Incorporate data lineage and auditing features in your data warehouse to track changes, maintain data quality, and comply with regulations.
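One simple way to approach lineage and auditing is to stamp every loaded row with its source and batch, and to record each load in an audit table. The sketch below uses Python's built-in `sqlite3` module as a stand-in for a warehouse; the table names (`sales`, `load_audit`), columns, and the `load_batch` function are assumptions made for illustration.

```python
import sqlite3
from datetime import datetime, timezone


def create_tables(conn: sqlite3.Connection) -> None:
    """Create a hypothetical fact table plus an audit table describing each load."""
    conn.executescript(
        """
        CREATE TABLE sales (
            order_id      INTEGER PRIMARY KEY,
            amount        REAL NOT NULL,
            source_system TEXT NOT NULL,     -- lineage: where the row came from
            batch_id      INTEGER NOT NULL   -- lineage: which load produced it
        );
        CREATE TABLE load_audit (
            batch_id      INTEGER PRIMARY KEY AUTOINCREMENT,
            source_system TEXT NOT NULL,
            loaded_at     TEXT NOT NULL,
            row_count     INTEGER NOT NULL
        );
        """
    )


def load_batch(conn: sqlite3.Connection, source_system: str, rows: list) -> int:
    """Load (order_id, amount) rows and record the load; returns the new batch id."""
    loaded_at = datetime.now(timezone.utc).isoformat()
    with conn:  # commit both inserts together, or roll both back on error
        cur = conn.execute(
            "INSERT INTO load_audit (source_system, loaded_at, row_count) VALUES (?, ?, ?)",
            (source_system, loaded_at, len(rows)),
        )
        batch_id = cur.lastrowid
        conn.executemany(
            "INSERT INTO sales (order_id, amount, source_system, batch_id) VALUES (?, ?, ?, ?)",
            [(order_id, amount, source_system, batch_id) for order_id, amount in rows],
        )
    return batch_id
```

With this layout, any row in `sales` can be traced back to the load that produced it by joining on `batch_id`.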

Data warehouse system analysis and data governance design

For example, if a department accesses one type of data from a data silo and another type from a data warehouse, it will lead to confusion, redundancy, and inefficiency. Each data mart should be implemented in its entirety as data is gradually migrated. The key functions of data warehouse software include processing and managing data so that meaningful insights can be drawn from the raw information.

It results in a single data warehouse without the smaller data warehouses in between, but it may take longer to see the benefits. The design is made to optimise the performance of SELECT queries across more data. INSERTs and UPDATEs happen rarely (often overnight as a big batch), while SELECT statements happen all the time.

Chamitha is an IT veteran specializing in data warehouse system architecture, data engineering, business analysis, and project management.

Analysis tools can give decision-makers insights into the integrity of their data that manual labour can’t replicate, but the process of quality assurance is costly and labour-intensive. If a data warehouse is like an ocean of data (vast, diverse, and containing data from all sources), a data mart is like a river: based on a single ecosystem and all flowing in the same direction.

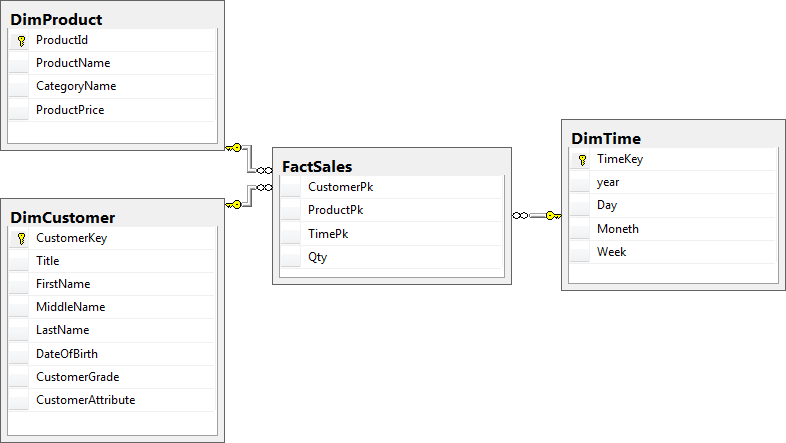

Data Model and Schema

The top-down approach is the most common way for large organisations to build data warehouses. Since it’s a strong, high-integrity model and data marts can be easily created from the warehouse, it’s a natural fit for organisations with large data needs. However, the expense of upkeep and maintaining an increasingly complex system is prohibitive for some organisations.

In Kimball's approach, in contrast to Inmon’s, the data warehouse contains a web of data marts. Data is organised into “star schemas” where data items are used consistently across different data marts. In Inmon's approach, importantly, the logical models are normalised (the process of structuring databases according to specific norms), and the physical data warehouse is built to reflect that normalised structure.
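To make the star schema idea concrete, here is a minimal sketch using Python's built-in `sqlite3` module: one central fact table whose rows reference surrounding dimension tables. All table names, column names, and sample values (`fact_sales`, `dim_product`, `dim_region`, and so on) are invented for illustration.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    -- Dimension tables: descriptive attributes, one row per product or region.
    CREATE TABLE dim_product (
        product_key  INTEGER PRIMARY KEY,
        product_name TEXT NOT NULL
    );
    CREATE TABLE dim_region (
        region_key  INTEGER PRIMARY KEY,
        region_name TEXT NOT NULL
    );
    -- Fact table: numeric measures plus a foreign key to each dimension.
    CREATE TABLE fact_sales (
        product_key INTEGER NOT NULL REFERENCES dim_product(product_key),
        region_key  INTEGER NOT NULL REFERENCES dim_region(region_key),
        amount      REAL NOT NULL
    );
    """
)
conn.executemany("INSERT INTO dim_product VALUES (?, ?)", [(1, "Widget"), (2, "Gadget")])
conn.executemany("INSERT INTO dim_region VALUES (?, ?)", [(1, "North"), (2, "South")])
conn.executemany(
    "INSERT INTO fact_sales VALUES (?, ?, ?)",
    [(1, 1, 100.0), (1, 2, 50.0), (2, 1, 25.0)],
)


def sales_by_region(connection: sqlite3.Connection) -> list:
    """Typical star-schema query: join the fact table to one dimension and aggregate."""
    return connection.execute(
        """
        SELECT r.region_name, SUM(f.amount)
        FROM fact_sales AS f
        JOIN dim_region AS r ON r.region_key = f.region_key
        GROUP BY r.region_name
        ORDER BY r.region_name
        """
    ).fetchall()
```

Because the same `dim_region` table could be shared by other fact tables, a region means the same thing in every data mart that uses it, which is the consistency the text describes.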

ETL's counterpart, Extract, Load, Transform (ELT), can negatively impact the performance of many custom-built warehouses, since data is loaded directly into the warehouse before data organization and cleansing occur. However, there might be other data integration use cases that suit the ELT process. Integrate.io not only executes ETL but can handle ELT, Reverse ETL, and Change Data Capture (CDC), as well as provide data observability and data warehouse insights. It involves labeling to map the volume of columns to their physical location. The next factor in choosing data warehouse design is the complexity of data sources.
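The difference between the two orderings can be sketched with Python's built-in `sqlite3` module standing in for a warehouse. In the ETL function, cleansing happens in application code before anything is loaded; in the ELT function, raw rows are loaded into a staging table first and cleansed inside the database. The sample data, table names, and function names are all hypothetical.

```python
import sqlite3

# Hypothetical raw extract: (name, orders) with messy whitespace and a bad value.
RAW_ROWS = [(" Alice ", "10"), ("BOB", "x"), ("carol", "7")]


def etl(conn: sqlite3.Connection, raw_rows: list) -> None:
    """ETL: transform in application code, then load only the clean rows."""
    conn.execute("CREATE TABLE customers_etl (name TEXT, orders INTEGER)")
    clean = [
        (name.strip().lower(), int(orders))
        for name, orders in raw_rows
        if orders.isdigit()  # drop rows whose order count is not numeric
    ]
    conn.executemany("INSERT INTO customers_etl VALUES (?, ?)", clean)


def elt(conn: sqlite3.Connection, raw_rows: list) -> None:
    """ELT: load raw rows into staging first, then transform with SQL in the database."""
    conn.execute("CREATE TABLE staging_customers (name TEXT, orders TEXT)")
    conn.executemany("INSERT INTO staging_customers VALUES (?, ?)", raw_rows)
    conn.execute(
        """
        CREATE TABLE customers_elt AS
        SELECT LOWER(TRIM(name)) AS name, CAST(orders AS INTEGER) AS orders
        FROM staging_customers
        WHERE orders <> '' AND orders NOT GLOB '*[^0-9]*'  -- keep digit-only values
        """
    )
```

Both paths end with the same clean rows here. What differs is where the transformation work runs and whether uncleansed data ever sits inside the warehouse, which is the trade-off the paragraph above refers to.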

ETL tools, such as Informatica PowerCenter, Microsoft SQL Server Integration Services (SSIS), and Talend, provide graphical interfaces and pre-built components for designing and executing ETL workflows. These tools automate many of the manual tasks involved in data extraction, transformation, and loading. Additionally, data integration platforms, such as Apache Kafka and Apache NiFi, offer real-time streaming capabilities that enable near-instantaneous data updates and integration. Choosing the right tools and techniques depends on the specific requirements and resources of your organization. The primary goal of data warehousing is to provide decision-makers with timely and accurate information to support data-driven decision-making.
